Natural Language Processing (NLP) has revolutionized the way we interact with computers, enabling them to understand and interpret natural human language in ways previously thought impossible. Whether it’s virtual assistants, language translation, or speech recognition, NLP is powering the next generation of intelligent applications.

In this article, I will show you how to create powerful language models with Python, and take your NLP skills to the next level. By the end of this tutorial, you will have the knowledge and tools to create your own language models. So, let’s get started and master NLP together!

Let’s start by exploring the concept of a language model and building one with Python.

What is a Language Model?

A language model is a probability distribution over a sequence of words.

In simpler words, it is a model that learns to predict the probability of a sequence of words.
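
One common way to make this definition concrete is the chain rule of probability. The notation $w_1, \dots, w_n$ for the words of the sequence is introduced here for illustration and is not part of the original text:

```latex
P(w_1, w_2, \dots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})
```

Each factor is the probability of the next word given the words before it, which is why a model that predicts the next word also assigns a probability to a whole sentence.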

Let’s play with some examples of “sequence of words”

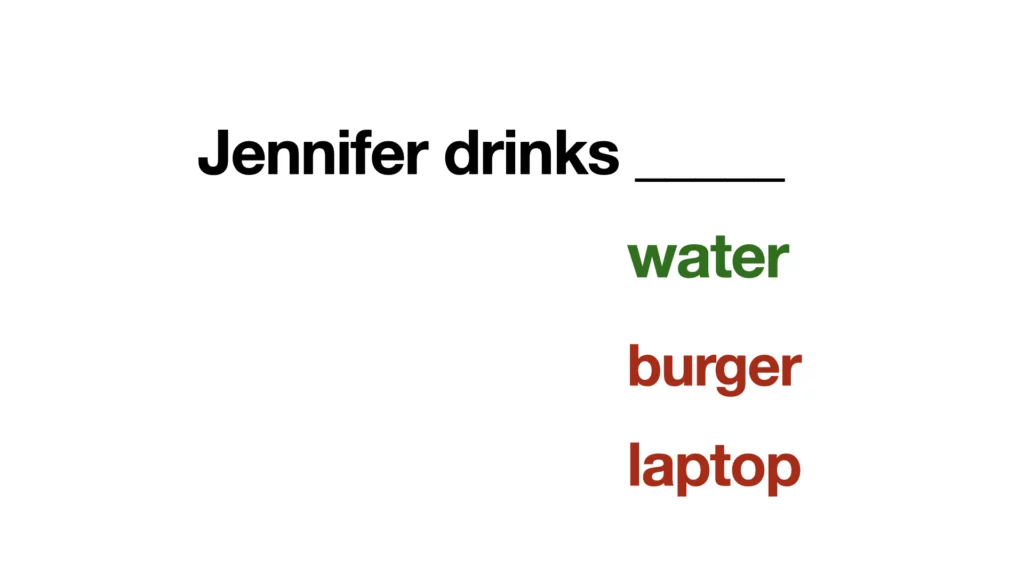

Which sequence of words is more likely to appear in English?

A. John likes to play

B. Play John likes

The first example follows the usual English word order, subject-verb-object (SVO).

The second example doesn’t.

The correct answer is A: a good language model assigns it a higher probability than B.

Language modeling is used in many natural language processing applications, such as machine translation, auto-complete, auto-correct, and speech recognition.

Types of Language Models

Rule-based models

Rule-based models are language models that use a set of hand-crafted rules to generate and interpret natural language. These models can be effective for simple tasks but are often limited by their reliance on explicit rules.

Statistical Language Models

Statistical language models estimate the probability of a sequence of words from counts observed in a training corpus.

Examples include N-gram models and Hidden Markov Models (HMMs).

Neural Language Models

Neural language models use different neural networks and deep learning algorithms to analyze and interpret natural language. These models can achieve state-of-the-art results.

Neural language models are often more complex than statistical models and they require large amounts of training data.

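Examples include Recurrent Neural Networks (RNNs), which read a sentence one word at a time and carry context forward in a hidden state. Plain RNNs struggle with long-term dependencies between words; gated variants such as LSTMs and GRUs handle them better.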

Transformer Models: Transformer models use self-attention mechanisms to process sequential data.

Examples of transformer models are BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pretrained Transformer).
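
To give a feel for what self-attention computes, here is a minimal sketch in plain Python. It is a simplification under stated assumptions: real transformers apply learned query, key, and value projections and use several attention heads, while this version uses the input vectors directly. The function names `softmax` and `self_attention` are chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Each output vector is a weighted average of all input vectors,
    weighted by how similar they are to the current position.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this position to every position, scaled by sqrt(dim)
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Weighted average of the input vectors
        outputs.append([
            sum(w * vec[j] for w, vec in zip(weights, vectors))
            for j in range(dim)
        ])
    return outputs

# Two toy "word vectors"; each output leans toward its own input
print(self_attention([[1.0, 0.0], [0.0, 1.0]]))
```

Because every position looks at every other position in one step, the model does not have to pass information along the sentence word by word as an RNN does.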

Hybrid Models

Hybrid language models combine multiple approaches, such as rule-based, statistical, and neural models.

Knowledge-based Models

Knowledge-based models use structured data, such as ontologies and semantic networks, to analyze and generate natural language. These models are effective for tasks that require a deep understanding of language semantics.

Let’s jump right into it with a few examples using Python.

Unlocking the Power of Language: Building an N-Gram Language Model with Python

What are N-grams?

N-grams refer to a series or sequence of N consecutive tokens or words.

There are several types of N-grams based on the number of tokens or words in the sequence:

- Unigrams: These are N-grams with a single token or word.

- Bigrams: These are N-grams with two tokens or words.

- Trigrams: These are N-grams with three tokens or words.

- 4-grams (Quadgrams): These are N-grams with four tokens or words.

- 5-grams (Pentagrams): These are N-grams with five tokens or words.

- N-grams with higher values of N, such as 6-grams (Hexagrams), 7-grams (Heptagrams), and so on.

The choice of N in N-grams depends on the application and the complexity of the language. For example, bigrams and trigrams are commonly used in language modeling tasks, while higher-order N-grams may be used for more complex language analysis.

For example, consider the following sentence:

"The big brown fox jumped over the fence"

- Unigrams:

"The", "big", "brown", "fox", "jumped", "over", "the", "fence"

- Bigrams:

"The big", "big brown", "brown fox", "fox jumped", "jumped over", "over the", "the fence"

- Trigrams:

"The big brown", "big brown fox", "brown fox jumped", "fox jumped over", "jumped over the", "over the fence"

- 4-grams (Quadgrams):

"The big brown fox", "big brown fox jumped", "brown fox jumped over", "fox jumped over the", "jumped over the fence"

- 5-grams (Pentagrams):

"The big brown fox jumped", "big brown fox jumped over", "brown fox jumped over the", "fox jumped over the fence"

- 6-grams (Hexagrams):

"The big brown fox jumped over", "big brown fox jumped over the", "brown fox jumped over the fence"

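The lists above can be reproduced with a few lines of plain Python. This is a minimal sketch that splits on whitespace; the helper name `extract_ngrams` is made up for illustration, and a real project would normally use a proper tokenizer.

```python
def extract_ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    # zip over n shifted copies of the token list: a sliding window of size n
    return list(zip(*(tokens[i:] for i in range(n))))

sentence = "The big brown fox jumped over the fence"
tokens = sentence.split()

bigrams = extract_ngrams(tokens, 2)
trigrams = extract_ngrams(tokens, 3)

print(len(bigrams), bigrams[0])     # 7 ('The', 'big')
print(len(trigrams), trigrams[-1])  # 6 ('over', 'the', 'fence')
```

A sentence with 8 words yields 8 - N + 1 N-grams, which matches the 7 bigrams and 6 trigrams listed above.
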
Example: Predict the next word

To predict the next word in a sentence, we can use a trigram model (N=3).

This model evaluates the likelihood of every potential next word based on the two previous words. This is achieved by counting how often each trigram occurs in a training corpus and dividing that count by the number of times its first two words occur together.
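
Before turning to a real corpus, the idea can be sketched on a toy example using only the standard library. The corpus and the function names `train_trigram_model` and `next_word_probabilities` are invented for illustration; the estimate is the plain relative frequency, with no smoothing for unseen trigrams.

```python
from collections import Counter, defaultdict

def train_trigram_model(tokens):
    """Map each two-word context to a Counter of the words that follow it."""
    model = defaultdict(Counter)
    for w1, w2, w3 in zip(tokens, tokens[1:], tokens[2:]):
        model[(w1, w2)][w3] += 1
    return model

def next_word_probabilities(model, context):
    """Estimate P(word | context) as count(context + word) / count(context)."""
    followers = model.get(context, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # context never seen in training
    return {word: count / total for word, count in followers.items()}

toy_corpus = "she drinks tea . she drinks coffee . she drinks tea .".split()
model = train_trigram_model(toy_corpus)
probs = next_word_probabilities(model, ("she", "drinks"))

# "tea" follows ("she", "drinks") twice and "coffee" once
print(max(probs, key=probs.get))  # tea
```

An unseen context returns an empty dictionary here; practical N-gram models usually add smoothing or back off to shorter contexts instead.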

Now that we understand what N-grams are, let’s move on to implementing N-gram models with Python.

Install NLTK using pip:

pip install nltk

We will be using the Reuters corpus, which is a collection of news documents.

Download the necessary data:

import nltk

nltk.download('punkt')

nltk.download('reuters')

from nltk.corpus import reuters

from nltk import ngrams, FreqDist

# Load the Reuters corpus

corpus = reuters.words()

# Tokenize the corpus into trigrams

n = 3

trigrams = ngrams(corpus, n)

# Count the frequency of each trigram

fdist = FreqDist(trigrams)

To begin, we load the Reuters corpus using the reuters.words() function, which returns the words in the corpus as a list-like sequence.

Next, we use the ngrams() function to slide a window of size N over the corpus and produce trigrams. The function accepts two arguments: the sequence of words and N (in this case, 3 for trigrams).

Finally, we count the frequency of each trigram using FreqDist().

With the frequency distribution of the trigrams, we can calculate probabilities and make predictions.

# Define the two-word context whose next word we want to predict

context = ('we', 'are')

# Collect the candidate next words, ordered from most to least frequent

next_words = [x[0][2] for x in fdist.most_common() if x[0][:2] == context]

# Print the candidates; the first one is the most likely next word

print(next_words)

Building a Neural Language Model (RNN) Using the Keras Library in Python

Let’s train a recurrent neural network (RNN) language model using the Keras library in Python to predict the next word in a sentence.

First, we need to prepare the training data. We will use a text corpus to train our language model. For this example, let’s use a small text corpus consisting of four sentences.

# Import the necessary libraries

from keras.preprocessing.text import Tokenizer

from keras.utils import to_categorical, pad_sequences

import numpy as np

# Define the text corpus

text_corpus = [

'She drinks coffee every morning.',

'She drinks tea in the afternoon.',

'She drinks water all day long.',

'She drinks wine in the evening.'

]

# Tokenize the text corpus

tokenizer = Tokenizer()

tokenizer.fit_on_texts(text_corpus)

sequences = tokenizer.texts_to_sequences(text_corpus)

# Pad the sequences to a fixed length

max_sequence_length = max([len(seq) for seq in sequences])

padded_sequences = pad_sequences(sequences, maxlen=max_sequence_length, padding='pre')

# Prepare the input and output sequences

X = padded_sequences[:,:-1]

y = to_categorical(padded_sequences[:,-1], num_classes=len(tokenizer.word_index)+1)

The next step is to define our recurrent neural network language model. We will use an LSTM (Long Short-Term Memory) layer to learn the temporal dependencies between words.

# Import the necessary libraries

from keras.models import Sequential

from keras.layers import Embedding, LSTM, Dense

# Define the model

model = Sequential()

model.add(Embedding(input_dim=len(tokenizer.word_index)+1, output_dim=50, input_length=max_sequence_length-1))

model.add(LSTM(100))

model.add(Dense(len(tokenizer.word_index)+1, activation='softmax'))

# Compile the model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Train the model

model.fit(X, y, epochs=50, verbose=1)

Finally, we can use the trained model to predict the next word in the sentence "she drinks coffee in the __".

We will first encode the sentence as a sequence of words using the tokenizer, and then use the model to predict the next word in the sequence.

# Encode the input sentence as a sequence of words

input_sentence = 'she drinks coffee in the '

input_sequence = tokenizer.texts_to_sequences([input_sentence])[0]

# Pad the input sequence to a fixed length

input_sequence = pad_sequences([input_sequence], maxlen=max_sequence_length-1, padding='pre')

# Use the model to predict the index of the most likely next word

predicted_index = int(np.argmax(model.predict(input_sequence, verbose=0), axis=-1)[0])

# Map the index back to a word (index 0 is reserved for padding and has no word)

predicted_word = tokenizer.index_word.get(predicted_index, '')

# Print the predicted word

print(predicted_word)

Note: this example uses a very small text dataset. Try replicating it with a larger dataset, and split the data into training and validation sets.
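
One way to get more training examples out of a corpus, and to hold some back for validation, is sketched below in plain Python. This is an illustration rather than part of the Keras example above: the helper names `make_training_pairs` and `train_validation_split` and the 20% validation fraction are assumptions chosen for the sketch.

```python
import random

def make_training_pairs(sentences):
    """Turn each sentence into (prefix, next_word) pairs, one per word position."""
    pairs = []
    for sentence in sentences:
        words = sentence.lower().rstrip(".").split()
        for i in range(1, len(words)):
            pairs.append((words[:i], words[i]))
    return pairs

def train_validation_split(pairs, validation_fraction=0.2, seed=0):
    """Shuffle the pairs reproducibly and hold out a fraction for validation."""
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]

sentences = [
    "She drinks coffee every morning.",
    "She drinks tea in the afternoon.",
]
pairs = make_training_pairs(sentences)
train_pairs, val_pairs = train_validation_split(pairs)

print(pairs[0])  # (['she'], 'drinks')
print(len(pairs), len(train_pairs), len(val_pairs))
```

With a larger dataset it is usually safer to split by sentence or document before building the pairs, so that prefixes of the same sentence do not end up in both sets.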

Transformer Model

Transformer models are currently eating the world with the widespread adoption of GPT (Generative Pretrained Transformer) and BERT models.

For this example, we will use transfer learning to build a transformer model that predicts the next word in a sentence.

The first step is to prepare the dataset:

Pre-trained language models like GPT-2 or GPT-3 are large-scale language models trained on massive amounts of text data.

We’ll need a dataset of text input and corresponding labels for the “next word” suggestions. You can use any dataset, such as the Gutenberg Corpus, Wikipedia or any other text corpus. You can then preprocess the dataset by tokenizing the text and splitting it into training and validation sets.

Load a pre-trained transformer model:

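You can use any pre-trained transformer model, such as BERT (Bidirectional Encoder Representations from Transformers) or GPT-2, as a base model for our project. For next-word prediction, a left-to-right model such as GPT-2 is the more natural fit, because BERT is trained to fill in masked words rather than to continue text. You can load the pre-trained model using the Hugging Face Transformers library and extract the necessary layers for your task.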

Define the model:

You can define your model as a sequence of layers, starting with an input layer, followed by a transformer layer, and ending with an output layer. You can use the pre-trained transformer layer as the main layer of your model and add additional layers for fine-tuning.

Compile the model:

You can compile the model with a suitable loss function and optimizer. For example, you can use the categorical cross-entropy loss function and the Adam optimizer.

Train the model:

You can train the model on your training data and validate it on your validation data. You can use techniques such as early stopping and learning rate scheduling to optimize the training process.

Test and evaluate the model:

You can evaluate the model on a test set and measure its performance using metrics such as next-word accuracy or perplexity.
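
As a rough sketch of what such an evaluation might compute for next-word prediction, the snippet below implements two metrics in plain Python: accuracy, and perplexity, which is commonly reported for language models. The function names and the toy predictions are invented for illustration; in practice the inputs would come from running the model on the test set.

```python
import math

def next_word_accuracy(predicted_words, actual_words):
    """Fraction of positions where the predicted word equals the actual word."""
    correct = sum(p == a for p, a in zip(predicted_words, actual_words))
    return correct / len(actual_words)

def perplexity(probabilities_of_actual_words):
    """exp of the average negative log-probability given to the true next words."""
    n = len(probabilities_of_actual_words)
    avg_neg_log = -sum(math.log(p) for p in probabilities_of_actual_words) / n
    return math.exp(avg_neg_log)

# Toy predictions: two of the three words are right
accuracy = next_word_accuracy(["tea", "wine", "water"], ["tea", "coffee", "water"])

# A model that always gives the true word probability 0.25 is as uncertain
# as a uniform choice among four words, so its perplexity is about 4
ppl = perplexity([0.25, 0.25, 0.25])

print(accuracy, ppl)
```

Lower perplexity means the model was less surprised by the test text, while accuracy only checks whether the single top prediction was right.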

Deploy the model:

Once we are satisfied with the performance of the model, we can deploy it as a web service or integrate it into an existing application. We can use frameworks like Flask or Django to build a RESTful API that exposes the functionality. We can also use tools like TensorFlow Serving or TorchServe to deploy the model in a scalable and efficient way.

Here is a simple example that uses only the pre-trained GPT-2 model, without fine-tuning:

import tensorflow as tf

# TFAutoModelForCausalLM replaces the deprecated TFAutoModelWithLMHead

from transformers import TFAutoModelForCausalLM, AutoTokenizer

# Load the pre-trained model and tokenizer

model_name = "gpt2"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = TFAutoModelForCausalLM.from_pretrained(model_name)

# Generate some sample text

input_text = "She loves to drink "

input_ids = tokenizer.encode(input_text, return_tensors='tf')

# Generate new text using the language model

output_ids = model.generate(

input_ids,

max_length=50,

do_sample=True,  # sampling must be enabled for top_p and temperature to take effect

num_return_sequences=1,

no_repeat_ngram_size=2,

repetition_penalty=1.5,

top_p=0.92,

temperature=0.85

)

# Decode the output text and print it

output_text = tokenizer.decode(output_ids[0], skip_special_tokens=True)

print(output_text)

Further Reading on Natural Language Processing

- Get started with Natural Language Processing

- Morphological segmentation

- Word segmentation

- Parsing

- Parts of speech tagging

- Sentence breaking

- Named entity recognition (NER)

- Natural language generation

- Word sense disambiguation

- Deep Learning (Recurrent Neural Networks)

- WordNet

- Language Modeling

Interested in learning how to build for production? Check out my publication, TreapAI.com.