Neural style transfer is an algorithm that takes two images, a content image and a style reference image, and blends them to produce an output that keeps the content of the first while taking on the visual style of the second.

In this tutorial, we will use a pre-trained deep learning model (a convolutional neural network) to render one image in the style of another.

TensorFlow Hub is a centralized repository of pre-trained machine learning models. It provides a one-stop shop for developers who want to use machine learning in their applications without building and training their models from scratch.

Mardi Gras is a celebration of the last day before Lent. It’s a festival of food, music, and dance.

It is one of the most famous festivals in the world, and its best-known celebration takes place in New Orleans.

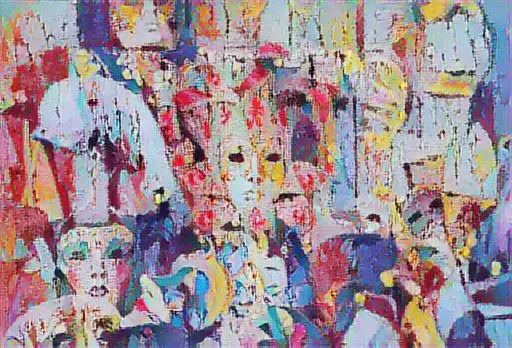

We will use these Mardi Gras and painting stock photos as the content and style reference images.

Style Transfer Environment Setup with TensorFlow on Colab

We will use the Google Colab environment to run our pre-trained deep learning model.

Code reproduced from TensorFlow.org.

Create a new notebook

Setup

import os

import tensorflow as tf

# Load compressed models from tensorflow_hub
os.environ['TFHUB_MODEL_LOAD_FORMAT'] = 'COMPRESSED'

import numpy as np
import IPython.display as display
import matplotlib.pyplot as plt
import matplotlib as mpl

mpl.rcParams['figure.figsize'] = (12, 12)
mpl.rcParams['axes.grid'] = False

import PIL.Image
import time
import functools

Create a function to convert a tensor to an image

def tensor_to_image(tensor):
    # Scale float values in [0, 1] to the 0-255 range of an 8-bit image
    tensor = tensor*255
    tensor = np.array(tensor, dtype=np.uint8)
    # Drop the leading batch dimension, if present
    if np.ndim(tensor)>3:
        assert tensor.shape[0] == 1
        tensor = tensor[0]
    return PIL.Image.fromarray(tensor)

Import the Mardi Gras and Painting Images to Colab
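The file names used in this section assume the stock photos have already been uploaded to the Colab session. Here is a minimal sketch of one way to do that, assuming the notebook runs in Colab, where `google.colab.files.upload()` opens a file picker and saves the chosen files to the working directory. `missing_files` and `upload_if_needed` are hypothetical helpers, not part of the original tutorial.

```python
import os

def missing_files(paths):
    """Return the paths that do not exist on disk."""
    return [p for p in paths if not os.path.exists(p)]

def upload_if_needed(paths):
    """Prompt for an upload in Colab when any expected image is missing."""
    missing = missing_files(paths)
    if not missing:
        return []
    try:
        # Only importable inside a Colab runtime (assumption: we are running there)
        from google.colab import files
    except ImportError:
        print("Not running in Colab; copy these files manually:", missing)
        return missing
    # Opens a file picker; uploaded files keep their original names
    files.upload()
    # Report anything that is still missing after the upload
    return missing_files(paths)
```

Calling `upload_if_needed(['content.jpg', 'reference.jpg'])` would then prompt only when one of the files is absent. The uploaded files must be named to match the paths the notebook expects.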

content_path_1 = 'content.jpg'
content_path_2 = 'content2.jpg'
content_path_3 = 'content3.jpg'
style_path_1 = 'reference.jpg'
style_path_2 = 'reference_style.jpg'
style_path_3 = 'reference_style3.jpg'

def load_img(path_to_img):
    # Load an image and scale it so that its longest side is 512 pixels
    max_dim = 512
    img = tf.io.read_file(path_to_img)
    img = tf.image.decode_image(img, channels=3)
    img = tf.image.convert_image_dtype(img, tf.float32)

    shape = tf.cast(tf.shape(img)[:-1], tf.float32)
    long_dim = max(shape)
    scale = max_dim / long_dim

    new_shape = tf.cast(shape * scale, tf.int32)

    img = tf.image.resize(img, new_shape)
    # Add a batch dimension: [height, width, 3] -> [1, height, width, 3]
    img = img[tf.newaxis, :]
    return img

def imshow(image, title=None):
    # Remove the batch dimension before plotting
    if len(image.shape) > 3:
        image = tf.squeeze(image, axis=0)

    plt.imshow(image)
    if title:
        plt.title(title)

content_image = load_img(content_path_1)

style_image = load_img(style_path_1)

plt.subplot(1, 2, 1)
imshow(content_image, 'Content Image')

plt.subplot(1, 2, 2)
imshow(style_image, 'Style Image')

Import TensorFlow Hub to use a pre-trained model
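The stylization model is called on batched float images, which is what `load_img` produces: a tensor of shape [1, height, width, 3] with values in [0, 1]. Here is a minimal sketch of a sanity check for that format, written with NumPy so it runs without TensorFlow. `check_model_input` is a hypothetical helper, not part of the original tutorial or of the TensorFlow Hub API.

```python
import numpy as np

def check_model_input(image):
    """Raise ValueError unless image looks like a [batch, h, w, 3] float array in [0, 1]."""
    arr = np.asarray(image)
    if arr.ndim != 4 or arr.shape[-1] != 3:
        raise ValueError(f"expected shape [batch, height, width, 3], got {arr.shape}")
    if not np.issubdtype(arr.dtype, np.floating):
        raise ValueError(f"expected a float dtype, got {arr.dtype}")
    if arr.min() < 0.0 or arr.max() > 1.0:
        raise ValueError("expected pixel values in the range [0, 1]")
    return arr.shape

# Synthetic image standing in for the output of load_img
dummy = np.random.rand(1, 384, 512, 3).astype(np.float32)
print(check_model_input(dummy))  # (1, 384, 512, 3)
```

In the notebook, the same check could be applied to `content_image` and `style_image` before they are passed to the model, assuming eager tensors convert cleanly through `np.asarray`.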

import tensorflow_hub as hub

hub_model = hub.load('https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/2')
stylized_image = hub_model(tf.constant(content_image), tf.constant(style_image))[0]
tensor_to_image(stylized_image)

# Repeat with the second pair of images
content_image = load_img(content_path_2)

style_image = load_img(style_path_2)

plt.subplot(1, 2, 1)
imshow(content_image, 'Content Image')

plt.subplot(1, 2, 2)
imshow(style_image, 'Style Image')

# hub_model was loaded earlier, so it is reused here instead of being imported and loaded again
stylized_image = hub_model(tf.constant(content_image), tf.constant(style_image))[0]
tensor_to_image(stylized_image)

# Repeat with the third pair of images
content_image = load_img(content_path_3)

style_image = load_img(style_path_3)

plt.subplot(1, 2, 1)
imshow(content_image, 'Content Image')

plt.subplot(1, 2, 2)
imshow(style_image, 'Style Image')

# hub_model was loaded earlier, so it is reused here instead of being imported and loaded again
stylized_image = hub_model(tf.constant(content_image), tf.constant(style_image))[0]
tensor_to_image(stylized_image)

Final Images – A Masterpiece
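To keep the results outside the notebook, the stylized images can be written to disk. Here is a minimal sketch, assuming a float image in [0, 1] with an optional leading batch dimension, as returned by the model. `save_stylized` and the output file name are hypothetical and not part of the original tutorial.

```python
import numpy as np
import PIL.Image

def save_stylized(image, path):
    """Convert a float image in [0, 1] to 8-bit RGB, save it to path, return its shape."""
    arr = np.asarray(image, dtype=np.float32)
    if arr.ndim > 3:
        # Drop the batch dimension, which must have size 1
        assert arr.shape[0] == 1
        arr = arr[0]
    # Clip before scaling so out-of-range values cannot wrap around in uint8
    arr = (np.clip(arr, 0.0, 1.0) * 255).astype(np.uint8)
    PIL.Image.fromarray(arr).save(path)
    return arr.shape
```

In the notebook, this could be called as `save_stylized(stylized_image, 'stylized_1.png')` after each stylization step. The saved files can then be downloaded from the Colab file browser.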