Fundamentals of Machine Learning

Machine learning (ML) is more than an internet buzzword, and the possibilities it promises are nothing short of fantasy and science fiction.

My objective in this article is to open you up to the world of machine learning.

We will start with some definitions, take a short trip down machine learning memory lane, and explore some exciting research.

From there, we will peep into “who is using ML in production” and see the prerequisites required to get started.

We will also discuss the different approaches to solving ML problems.

We will look at the platforms and frameworks used in coding for ML.

Lastly, we will chat about what comes next.

This article is a long read, and you won’t be writing code. Grab something to drink or eat, sit back and enjoy 🙂

What is Machine Learning?

I like to define Machine Learning (ML) as the science of getting a computer to learn to solve a problem from experience, just as humans do, without explicitly instructing it. Where humans learn from experience, computers learn from data.

Machine learning is a sub-field of Artificial Intelligence (AI) and Computer Science that focuses on how computer algorithms automatically learn and improve their accuracy without being explicitly programmed.

The goal is for computers to learn with no human intervention.

ML is used in diverse applications and has proved helpful in almost every field: medicine and health, transportation, education, entertainment, and finance.

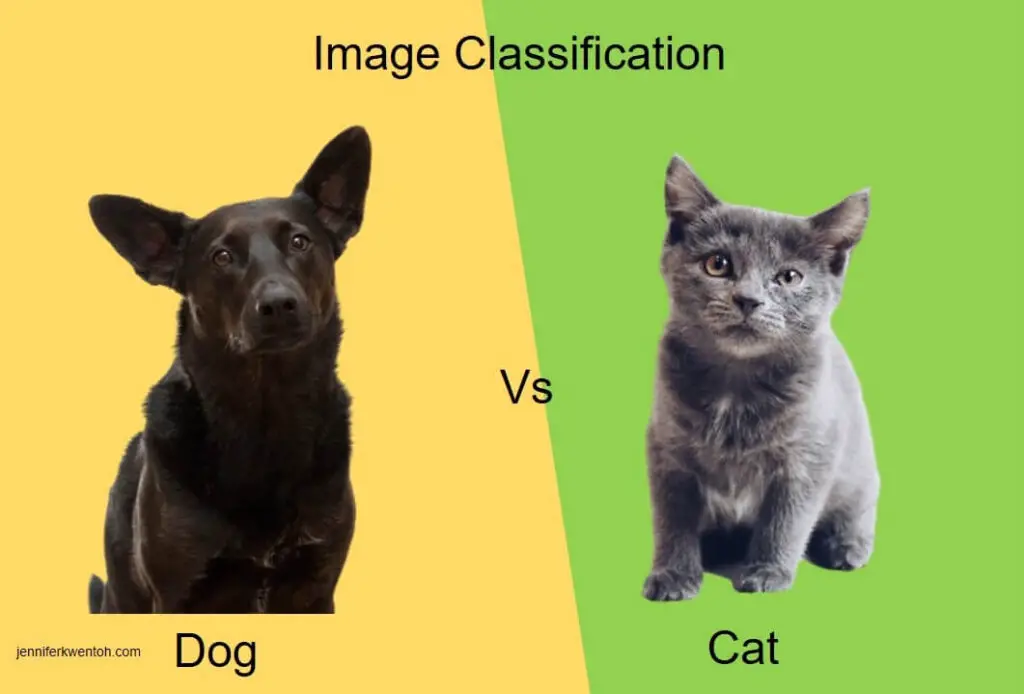

ML thrives at solving problems that are extremely difficult for conventional programming logic, for example, image classification.

The example below involves classifying whether an image shows a dog or a cat.

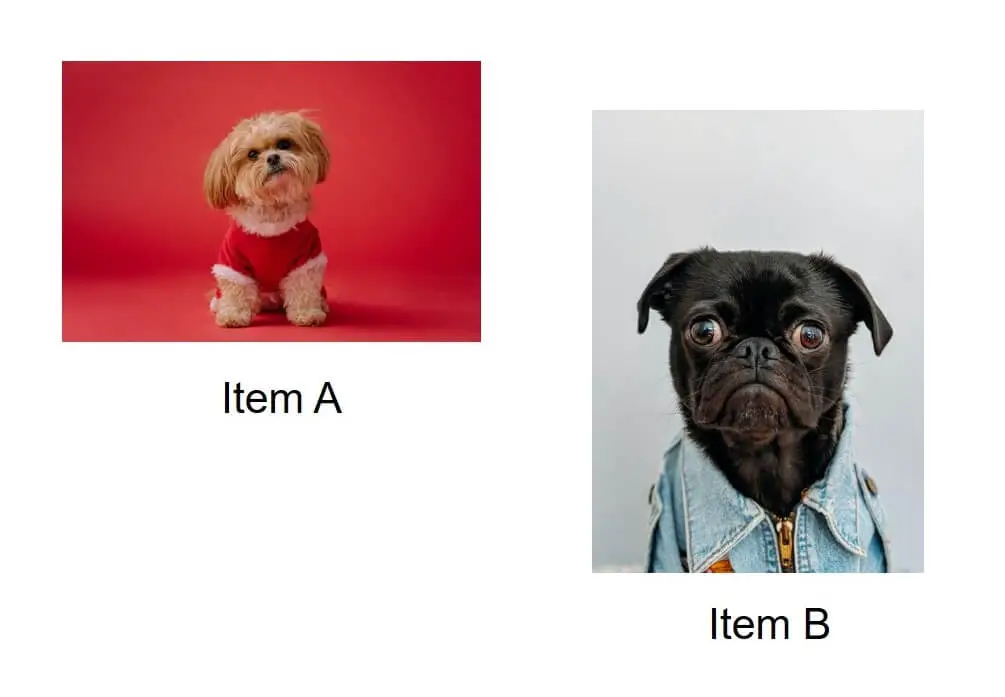

Writing code to recognize and differentiate images can be complicated. Even if we find a way to perfectly describe the appearance of the dog in Item A to the computer, what happens when we show the picture in Item B to the same algorithm?

Not all dogs have a long tail. Some dogs are hairy, and some are not hairy.

If we try building it with traditional programming, the algorithm will be thousands of if-else statements. Even then, it still won’t be accurate.

In image classification, the goal is to identify images of a particular object or thing accurately. Using traditional programming, some photos of dogs will be falsely classified as cats and vice versa.

Machine learning solves this using a different approach. Instead of instructing the computer on every step, we feed the computer many example images of dogs and cats.

The algorithm learns over time the characteristics that genuinely describe a dog and a cat.

Generalization

The goal of a machine learning model is not to memorize the data but to generalize. In machine learning, algorithms train on a sample of data known as training data. The resulting model is then tested on a separate testing dataset.
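If you are curious what the training/testing split looks like in practice, here is a minimal sketch in plain Python. The dataset, the `train_test_split` helper, and the 25% test fraction are all assumptions made up for illustration; real projects usually rely on a library for this step.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical dataset of (feature, label) pairs
data = [(x, x % 2) for x in range(20)]

train, test = train_test_split(data)
print(len(train), "training rows,", len(test), "testing rows")
```

The model only ever sees `train` while learning; `test` is held back so we can check whether it generalizes to rows it has never seen.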

ML is closely related to fields like Data Mining and Statistics. Some parts of ML focus on problems like predictive analysis, which is a statistical problem, but not all of machine learning is statistics. Machine learning requires training and testing data, so knowing how to mine the correct data is a plus for ML engineers.

Other related fields include Linguistics, Data Engineering, and Software Engineering.

Why is Machine Learning Important?

ML has been used to solve everything from simple, basic tasks to challenging scientific problems. The ability to solve complex problems is one of the main attractions of machine learning.

The availability of high computational power and large volumes of data is the reason behind the recent wave of ML-powered products.

ML is used in business operations to segment and understand customers’ data. It is used to optimize and automate routine tasks, mitigate risks, classify scenarios as fraudulent or non-fraudulent, predict financial and market trends, recommend products to customers, and improve tedious existing processes. Because of machine learning, businesses have been able to make intelligent decisions and stay ahead of the competition.

Machine Learning History

Just like every other scientific field, ML has a great history: from the famous Turing test proposed by Alan Turing in 1950, John McCarthy and the Dartmouth conference in the summer of 1956, and the dreadful AI winter of the 1980s, to AlphaGo, the AI developed by DeepMind that beat a world champion Go player in 2016. More recently, there is GPT-3, an autoregressive language model designed by OpenAI.

In 1950, Alan Turing proposed the famous “Turing Test.”

For a machine to pass the test, it has to convince a human that it is another human and not a computer.

Arthur Lee Samuel, a pioneering researcher in computer gaming and artificial intelligence, designed in 1952 one of the first computer programs that learned as it played: a checkers player. In 1959, he coined the term “machine learning.”

In 1958, Frank Rosenblatt designed the perceptron, one of the first artificial neural networks.

In the 1960s, Nils J. Nilsson’s book “Learning Machines” laid out the theory behind pattern recognition and pattern classification.

The late 1980s and 1990s witnessed a massive collapse in the field. Research funding dried up. Big corporations that financed AI research withdrew interest.

However, things bounced back, and there was a big win in 1997 when IBM’s Deep Blue, a chess-playing AI, beat the world champion, Garry Kasparov, in a chess match.

More and more groundbreaking research has since sprung up.

Some Interesting research in Machine learning

Since the end of the AI winter, we have seen more exciting research, thanks to high computing power and the internet, which makes big data available.

AlexNet (2012)

AlexNet is a deep convolutional neural network that won the 2012 ImageNet Large Scale Visual Recognition Challenge.

The network was trained to classify the 1.2 million high-resolution images in the ImageNet LSVRC-2010 training set into 1000 different classes. It has about 650,000 neurons arranged in eight layers: five convolutional layers and three fully-connected layers.

Read the paper by Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton.

See code implementation.

GPT-3

GPT-3, released at the peak of the COVID-19 pandemic lockdown, was one of the highlights of 2020. GPT-3, short for Generative Pre-trained Transformer 3, is a language model that produces human-like text. It succeeded GPT-2 and was created by the OpenAI research laboratory.

OpenAI mentioned on its website that over 300 applications are currently powered by GPT-3, delivering search, conversation, text completion, and other advanced AI features through its API.

Microsoft ImageBERT

ImageBERT is a pre-trained model that combines natural language processing (NLP) and computer vision (CV) to learn a joint representation of images and text. It was created by researchers on the Bing Multimedia Team at Microsoft: Di Qi, Lin Su, Jia Song, Edward Cui, Taroon Bharti, and Arun Sacheti.

Read the paper: “ImageBERT: Cross-modal Pre-training with Large-scale Weak-supervised Image-Text Data.”

Machine learning in action

Away from research, who is using ML in production?

You are most likely using ML products already. Organizations big and small are incorporating ML into their products.

Google uses machine learning to filter out spam messages.

Facebook uses ML in ads placement.

Grammarly is a writing assistant that helps you compose writings with better grammar and checks for spelling errors.

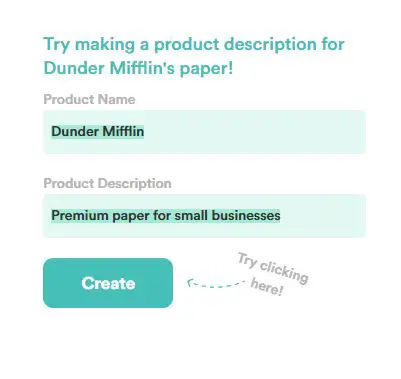

CopyAI is an excellent tool for copywriting. If you have writer’s block or stale copy, type a few words about the content you are working on and it generates text for you.

Machine learning prerequisites

Statistics

Statistics is the discipline concerned with the collection, organization, analysis, interpretation, and presentation of empirical data.

Machine learning largely depends on data, and Statistics is a discipline that focuses on data.

Some tools and concepts used in statistics can be handy for machine learning.

For example, descriptive statistics are used to transform raw data into useful information.

Essential concepts include the mean, mode, median, skewness, standard deviation, and outliers.
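To make these concepts concrete, here is a small sketch using Python's built-in `statistics` module. The sign-up numbers are invented for illustration, and the "more than two standard deviations from the mean" rule for outliers is just one common rule of thumb, not the only definition.

```python
import statistics

# Hypothetical sample: daily sign-ups, with one unusually busy day
signups = [12, 15, 11, 15, 14, 13, 15, 60]

mean = statistics.mean(signups)
median = statistics.median(signups)
mode = statistics.mode(signups)
std_dev = statistics.stdev(signups)  # sample standard deviation

# Rule of thumb (an assumption): flag values more than
# two standard deviations away from the mean as outliers
outliers = [x for x in signups if abs(x - mean) > 2 * std_dev]

print("mean:", mean, "median:", median, "mode:", mode)
print("standard deviation:", round(std_dev, 2))
print("outliers:", outliers)
```

Notice how the single busy day pulls the mean well above the median. That gap is a quick hint that the data is skewed to the right.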

Having a good basic knowledge of statistics will help you understand data and extract information from data.

Mathematics: Linear Algebra, Calculus, Probability

Mathematics helps to understand the underlying fundamentals of machine learning algorithms.

Calculus

Calculus plays an essential role in designing ML algorithms.

Some topics include:

- Multivariate calculus

- Derivatives

- Gradient and gradient descent

- Chain rule

- Vector calculus
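As a taste of how derivatives and gradient descent fit together, here is a minimal sketch. The loss function `(w - 3) ** 2`, the learning rate, and the number of steps are all assumptions chosen to keep the example tiny; real ML models have many parameters, but the idea is the same.

```python
def loss(w):
    """A toy loss with its minimum at w = 3."""
    return (w - 3) ** 2

def gradient(w):
    """Derivative of the loss with respect to w."""
    return 2 * (w - 3)

def gradient_descent(start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the loss."""
    w = start
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w

w_min = gradient_descent(start=0.0)
print("w after training:", round(w_min, 4))
```

Each step moves `w` a little in the direction that lowers the loss, which is why the derivative matters: it tells the algorithm which way is downhill.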

Probability

Probability describes the likelihood of an event occurring.

Some topics include:

- Probability distribution

- Rules of probability

- Random Variables
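Here is a short sketch that touches all three topics using a classic example I picked for illustration: the total shown by two fair dice. It builds the probability distribution by counting outcomes, applies the addition rule, and computes the expected value of the random variable.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice
outcomes = list(product(range(1, 7), repeat=2))

# Probability distribution of the random variable "total of both dice"
counts = Counter(a + b for a, b in outcomes)
distribution = {total: Fraction(n, len(outcomes)) for total, n in counts.items()}

p_seven = distribution[7]

# Addition rule for mutually exclusive events: P(7 or 11) = P(7) + P(11)
p_seven_or_eleven = distribution[7] + distribution[11]

# Expected value: each total weighted by its probability
expected_total = sum(total * p for total, p in distribution.items())

print("P(7) =", p_seven)
print("P(7 or 11) =", p_seven_or_eleven)
print("Expected total =", expected_total)
```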

Algebra

Linear Algebra deals with vectors, matrices, and linear transformations.

Some topics include:

- Variables, functions and coefficients

- Logarithms

- Linear equations

- Matrix multiplication
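Matrix multiplication is worth seeing once by hand. The sketch below uses plain Python lists; the `matmul` helper and the example matrices are my own illustration, and in practice a numerical library would do this for you.

```python
def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("columns of a must match rows of b")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul(A, B)
print(C)

# A matrix can also act as a linear transformation on a vector.
# This one rotates a 2D point by 90 degrees counter-clockwise.
rotate_90 = [[0, -1], [1, 0]]
point = [[1], [0]]  # column vector
rotated = matmul(rotate_90, point)
print(rotated)
```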

Programming

Programming languages such as Python, R, Scala, MATLAB, and JavaScript can be used for machine learning. Python and R are the most popular and widely used.

To learn Python, check out my free tutorials on DailyCodingDev.

Prerequisite for ML in Production

Data Modelling and Data Analysis

If your focus is on applied machine learning and building ML models for production, this should be one of your primary prerequisites.

If your goal is to build and deploy models in production, you don’t need to be an expert in calculus and math.

You need a good knowledge of collecting, cleaning, aggregating, exploring, and visualizing data.

You will spend a lot of time preparing and exploring your data.

Some topics include:

- Data collection

- Exploratory data analysis

- Data cleaning

- Modelling

- Data visualization
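To give a feel for the cleaning and aggregating steps listed above, here is a small sketch in plain Python. The records, the field names `city` and `temp_c`, and the decision to drop rows with unusable values are all assumptions for illustration; a real project would choose its own rules, often with a data analysis library.

```python
import statistics

# Hypothetical raw records, as they might arrive from a CSV export
raw_rows = [
    {"city": " Lagos", "temp_c": "31.5"},
    {"city": "lagos", "temp_c": "30.5"},
    {"city": "Abuja ", "temp_c": ""},      # missing value
    {"city": "Abuja", "temp_c": "28.0"},
    {"city": "Abuja", "temp_c": "n/a"},    # unparseable value
]

def clean(rows):
    """Normalize city names and drop rows whose temperature is not a number."""
    cleaned = []
    for row in rows:
        try:
            temp = float(row["temp_c"])
        except ValueError:
            continue  # assumption: unusable rows are dropped, not repaired
        cleaned.append({"city": row["city"].strip().title(), "temp_c": temp})
    return cleaned

def mean_by_city(rows):
    """Aggregate: average temperature per city."""
    groups = {}
    for row in rows:
        groups.setdefault(row["city"], []).append(row["temp_c"])
    return {city: statistics.mean(temps) for city, temps in groups.items()}

clean_rows = clean(raw_rows)
summary = mean_by_city(clean_rows)
print(summary)
```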

However, there is no need to be an expert on these prerequisites to start machine learning.

Machine Learning Approaches

Supervised learning

Supervised learning is an approach that models the relationship between the input variables (features) and the output variable (target or labels) of a given dataset, so that it can accurately predict output labels for new inputs based on what it learned.

The goal is to map an input value (X) to the desired output value (Y):

Y = f(X)

The job of the learning algorithm is to generalize and not to memorize the training data.

Generalizing helps the algorithm to perform well in an unseen environment.

Supervised learning problems can be grouped into classification and regression problems.
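Here is a minimal sketch of a regression problem: learning `Y = f(X)` from examples by fitting a straight line with ordinary least squares. The training data is made up (I generated it from `Y = 2X + 1`), and `fit_line` and `predict` are illustrative helper names, not part of any library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: features X and labels Y
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_line(xs, ys)

def predict(x):
    """The learned function f, applied to a new input."""
    return slope * x + intercept

print("learned f(X) =", slope, "* X +", intercept)
print("prediction for X = 10:", predict(10))
```

The model never saw `X = 10` during training, yet it can predict a sensible value for it. That is generalization at a very small scale.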

Some supervised learning algorithms include:

- Support vector machines

- Linear regression

- Logistic regression

- Decision trees

- Naïve Bayes

- K-nearest neighbor

- Linear discriminant analysis (LDA)

- Similarity learning

- Neural Networks
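To show one of these algorithms on a classification problem, here is a sketch of k-nearest neighbor applied to the dog-versus-cat idea from earlier. The features (weight and ear length) and every number in the training set are invented for illustration; a real image classifier would work from pixels, not two hand-picked measurements.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Label a query point by majority vote among its k closest training points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical labeled data: (weight in kg, ear length in cm) -> label
train = [
    ((4.0, 6.5), "cat"),
    ((3.5, 6.0), "cat"),
    ((5.0, 7.0), "cat"),
    ((20.0, 12.0), "dog"),
    ((30.0, 14.0), "dog"),
    ((25.0, 11.0), "dog"),
]

small_animal = knn_predict(train, (4.5, 6.8))
large_animal = knn_predict(train, (27.0, 13.0))
print(small_animal, large_animal)
```

There are no if-else rules describing what a dog looks like. The labels on the training examples do all the work.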

Unsupervised learning

An unsupervised learning approach is used when there is no output label. The learning algorithm models the distribution of the data in order to learn about it. Here the algorithm learns patterns.

Unsupervised learning problems can be grouped into association and clustering problems.

Some algorithms include:

- K-means

- Apriori algorithm
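Here is a minimal sketch of K-means on one-dimensional data, just to show the idea of finding groups without labels. The points and the starting centroids are assumptions chosen for illustration, and the fixed number of iterations is a simplification; fuller implementations usually stop when the centroids no longer move.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Cluster 1D points by alternating assignment and centroid update."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled data with two obvious groups
points = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]

centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])
print("centroids:", centroids)
print("clusters:", clusters)
```

Nobody told the algorithm which points belong together. It discovered the two groups from the data alone.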

Semi-supervised learning

The semi-supervised learning approach tackles problems by combining techniques from supervised and unsupervised learning.

For example, when there is not enough labeled data, an unsupervised technique can learn the structure of the unlabeled data, while a supervised method models the relationship in the labeled data that does exist.

Reinforcement learning

Reinforcement learning aims at training a model to make a sequence of decisions. This approach employs trial and error: an intelligent agent is tasked with performing a specific activity, and it is either punished for failing or rewarded for succeeding. The reinforcement learning approach is robust and is used to create some of the most sophisticated machine learning products, e.g., self-driving cars, robotics, and game-playing AI.
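To illustrate learning by trial and error, here is a small sketch using tabular Q-learning, one well-known reinforcement learning algorithm that I picked for this example. The environment is a made-up five-cell corridor where the agent starts on the left and is rewarded only for reaching the far right. All names and settings (`step`, `train`, the learning rate, the discount) are assumptions, and this toy version uses a reward only, with no punishment.

```python
import random

N_STATES = 5        # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right

def step(state, action):
    """Move along the corridor; reaching the last cell gives a reward of 1."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Learn a table of action values from random trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(max_steps):
            a = rng.randrange(2)  # explore by acting at random
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward
            # reward + discounted best value of the next state
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q_table = train()

# Read off the learned policy for the non-goal cells
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The agent is never told that the goal is on the right. It works that out from the rewards it collects while wandering around.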

Summary of ML approaches

We learned about the approaches to tackle machine learning problems.

They are:

- Supervised learning

- Unsupervised learning

- Semi-supervised learning

- Reinforcement learning

Machine learning frameworks and platforms

Some of the platforms and frameworks used in building for machine learning include:

- PyTorch – Open source machine learning library developed by Facebook’s AI research lab

- TensorFlow – Open source library for ML

- scikit-learn – Open source ML library for Python

- spaCy – Open source library for natural language processing

- AfriLang – Open source library for natural language processing

- Python, Scala, R – Programming languages

- Theano – Python library for numerical computation

- AWS SageMaker – Cloud ML platform

- Google ML toolkit – Cloud ML platform

- Anaconda – ML and data science package management

- IBM Watson Studio – Cloud ML platform

- Google Colab – Cloud Jupyter notebook environment

Get started learning in ML

Communities and online learning have democratized machine learning education. Even without a master’s degree in computer science, people have learned, built products, and done research.

Where to learn online

- My own machine learning series.

- Stanford Machine learning course on Coursera by Andrew Ng.

- edX ML course.

- Pluralsight machine learning courses

I lead an AI community, and I have learned more in meetups and group discussions.

Also, check out the list of machine learning and data science communities across the world.

Bonus: Some Machine learning terms and definitions

- Training, validation, and test datasets: Data used to train, validate, and test a model.

- Data cleaning: The process of detecting and correcting errors, filtering noise and inaccuracies in a dataset.

- Overfitting: When a model memorizes a training dataset by capturing noise.

- Underfitting: When a model is unable to capture the true properties of a dataset.

- Regularization: Used to prevent a model from overfitting.

- Ground Truth: Used to check the accuracy of a model against the real world.

- Neural Networks:

- Deep Learning: Neural networks with multiple layers.

- Computer Vision: An interdisciplinary scientific field that deals with how computers understand images.

- Natural Language Processing (NLP): An interdisciplinary scientific field that deals with the interaction between computers and human natural language.

- Artificial General Intelligence:

- Bias

- Noise

- Active learning

- Perceptron

- Classification: Used in predicting discrete values, e.g., spam or not spam.

- Regression: Used in predicting continuous values, e.g., weather prediction.

- Curse of dimensionality

- Random forest

- Clustering

- Association rules

See full Glossary for more terms and definitions.

Conclusion

Hey! You made it to the end. 🎉

In conclusion, we discussed the definition of machine learning and why ML is essential. We took a short trip through ML history and returned to recent research and products in production. We also looked at prerequisites to get started and approaches to solving problems. Lastly, we looked at a list of some popular frameworks and how to start learning.

I hope you enjoyed this as much as I did.

You can subscribe to my newsletter to get weekly exclusive machine learning tips.

Follow my Machine learning series here.

To follow topics on natural language processing, start here.

Stay safe
